
libagf is a Peteysoft project

Consider the following generalization of a k-nearest neighbours scheme:

|

(1) | ||

|

(2) |

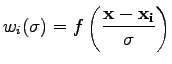

where

|

(3) |

where

The parameter $W$ is equivalent to the number of nearest neighbours, $k$, in a k-nearest-neighbours classifier and is held fixed by varying the filter width, $\sigma$. This keeps a uniform number of samples within the central region of the filter.


An obvious choice for $w$ would be a Gaussian:

$$w_i = \exp\left(-\frac{|\vec x_i - \vec x|^2}{2\sigma^2}\right) \qquad (4)$$

Where the upright brackets denote a metric, typically Cartesian. The width of the Gaussian can be effectively varied by simply squaring the weights, thus they may be pre-calculated for an initial, trial value of

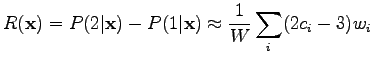
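A minimal Python sketch of equations (1), (2) and (4) follows. It assumes a Euclidean metric and class labels 0, 1, ..., and it holds $W$ fixed by bisecting on the power $p$ applied to weights pre-computed for a trial width, in the spirit of the squaring trick above. The function names and the choice of trial width (the mean squared distance) are hypothetical illustrations, not the libagf API.

```python
import math


def agf_weights(train_x, x, w_target, max_iter=200, tol=1e-12):
    """Gaussian weights w_i = exp(-d_i^2 / (2 sigma^2)) whose total is w_target.

    Weights are computed once for a trial width sigma0 and then raised to a
    power p, which is the same as using sigma^2 = sigma0^2 / p.
    Returns (weights, sigma).
    """
    # Squared Euclidean distances from the test point to each training sample
    d2 = [sum((a - b) ** 2 for a, b in zip(xi, x)) for xi in train_x]
    n_exact = sum(1 for d in d2 if d == 0.0)
    if not n_exact < w_target < len(d2):
        raise ValueError("need (number of exact matches) < w_target < n")
    # Trial width: mean squared distance (a hypothetical choice)
    sigma0_sq = sum(d2) / len(d2)
    w0 = [math.exp(-d / (2.0 * sigma0_sq)) for d in d2]

    def total(p):
        return sum(w ** p for w in w0)

    # Total weight falls as p rises: bracket the target, then bisect on log(p)
    lo, hi = 1.0, 1.0
    while total(lo) < w_target:
        lo /= 2.0
        if lo == 0.0:
            raise ArithmeticError("trial weights underflowed; use a wider sigma0")
    while total(hi) > w_target:
        hi *= 2.0
    for _ in range(max_iter):
        mid = math.sqrt(lo * hi)
        if total(mid) > w_target:
            lo = mid
        else:
            hi = mid
        if hi - lo <= tol * hi:
            break
    p = math.sqrt(lo * hi)
    return [w ** p for w in w0], math.sqrt(sigma0_sq / p)


def agf_probabilities(train_x, train_c, x, w_target, n_class):
    """Conditional probabilities P(j|x), equation (1), for labels j = 0 .. n_class-1."""
    weights, sigma = agf_weights(train_x, x, w_target)
    total_w = sum(weights)  # equals w_target to within the bisection tolerance
    probs = [0.0] * n_class
    for w, c in zip(weights, train_c):
        probs[c] += w / total_w
    return probs, sigma


if __name__ == "__main__":
    train_x = [[0.0], [1.0], [2.0], [3.0]]
    train_c = [0, 0, 1, 1]
    probs, sigma = agf_probabilities(train_x, train_c, [1.5], 2.0, 2)
    print(probs, sigma)  # symmetric test point: both probabilities close to 0.5
```

Dividing by the actual sum of the weights, rather than by the target, keeps the estimated probabilities summing to exactly one even when the bisection stops slightly short of the target.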

The primary advantage of the above over k-nearest neighbours is that it generates estimates that are both continuous and differentiable.

Both features may be exploited: first to find the class borders, then to perform classifications and estimate the conditional probabilities. Let $R$ be the difference in conditional probabilities between two classes:


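$$R(\vec x) = P(+1 \mid \vec x) - P(-1 \mid \vec x) \approx \frac{1}{W} \sum_{i=1}^{n} c_i w_i \qquad (5)$$

where the two classes are labelled $c_i = -1$ and $c_i = +1$. $R$ ranges between $-1$ and $+1$, and the class border is the set of points at which $R = 0$.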
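The text does not say how the borders are located. One possibility, sketched below under that assumption, is to bisect along the segment joining two training samples of opposite class until $R$ changes sign; because $R$ is continuous, a sign change brackets a point on the border. The sketch assumes a Euclidean metric, labels $c_i = \pm 1$, and a fixed bracket $[10^{-6}, 10^{6}]$ for $\sigma$ that suits data of roughly unit scale. The names `gaussian_weights`, `r_estimate` and `find_border` are hypothetical, not libagf functions.

```python
import math


def gaussian_weights(train_x, x, w_target, iters=100):
    """Weights exp(-d_i^2 / (2 sigma^2)) with sigma chosen so that their sum is
    w_target, by bisection on log(sigma) over a fixed bracket.

    Assumes (number of samples exactly at x) < w_target < n and that the
    data scale lies well inside the bracket [1e-6, 1e6].
    """
    d2 = [sum((a - b) ** 2 for a, b in zip(xi, x)) for xi in train_x]

    def weights(sigma):
        return [math.exp(-d / (2.0 * sigma * sigma)) for d in d2]

    lo, hi = 1e-6, 1e6  # hypothetical bracket for sigma
    for _ in range(iters):
        mid = math.sqrt(lo * hi)
        if sum(weights(mid)) < w_target:
            lo = mid  # filter too narrow: widen it
        else:
            hi = mid
    return weights(math.sqrt(lo * hi))


def r_estimate(train_x, train_c, x, w_target):
    """R = P(+1|x) - P(-1|x), estimated as sum(c_i w_i) / W with c_i in {-1, +1}."""
    w = gaussian_weights(train_x, x, w_target)
    return sum(wi * ci for wi, ci in zip(w, train_c)) / sum(w)


def find_border(train_x, train_c, xa, xb, w_target, tol=1e-10):
    """Bisect along the segment xa -> xb for a point where R changes sign.

    Requires R(xa) and R(xb) to have opposite signs, as is typical when xa and
    xb are training samples of different classes.
    """
    def point(t):
        return [a + t * (b - a) for a, b in zip(xa, xb)]

    def r_at(t):
        return r_estimate(train_x, train_c, point(t), w_target)

    lo, hi = 0.0, 1.0
    r_lo = r_at(lo)
    if r_lo * r_at(hi) >= 0.0:
        raise ValueError("R does not change sign between xa and xb")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        r_mid = r_at(mid)
        if r_mid == 0.0:
            return point(mid)
        if (r_mid > 0.0) == (r_lo > 0.0):
            lo, r_lo = mid, r_mid  # sign change lies in the upper half
        else:
            hi = mid
    return point(0.5 * (lo + hi))


if __name__ == "__main__":
    train_x = [[0.0], [1.0], [2.0], [3.0]]
    train_c = [-1, -1, 1, 1]
    print(find_border(train_x, train_c, [1.0], [2.0], 2.0))  # symmetric data: about [1.5]
```

Bisection is used here only because it is simple and guaranteed to converge once a sign change is bracketed; a faster bracketing root finder would serve equally well.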

The class of a test point is estimated as follows:

where

This algorithm is robust, general and efficient, yet it still supplies the conditional probabilities, which are useful for gauging the accuracy of an estimate without prior knowledge of its true class.


Peter Mills 2007-11-03

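The gradient of $R$ with respect to the $j$th coordinate of the test point, accounting for the change in $\sigma$ needed to keep $W$ fixed, is:

$$\frac{\partial R}{\partial x_j} \approx \frac{1}{W \sigma^2} \sum_i c_i w_i \left[ x_{ij} - x_j - d_i^2 \frac{\sum_k w_k (x_{kj} - x_j)}{\sum_k d_k^2 w_k} \right]$$

where $d_i = |\vec x_i - \vec x|$, $x_{ij}$ is the $j$th coordinate of the $i$th training sample and $c_i = \pm 1$ is its class label.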
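The gradient expression can be checked numerically. The sketch below assumes a Euclidean metric and labels $c_i = \pm 1$, solves for $\sigma$ by bisection over a hypothetical bracket $[10^{-6}, 10^{6}]$, evaluates the analytic gradient term by term, and compares it with central finite differences of $R$. The helper names are illustrative only, not part of libagf.

```python
import math


def adaptive_sigma(d2, w_target, iters=200):
    """Filter width sigma such that sum(exp(-d2_i / (2 sigma^2))) = w_target.

    Bisection on log(sigma) over a hypothetical bracket [1e-6, 1e6].
    """
    lo, hi = 1e-6, 1e6
    for _ in range(iters):
        mid = math.sqrt(lo * hi)
        if sum(math.exp(-d / (2.0 * mid * mid)) for d in d2) < w_target:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)


def r_and_gradient(train_x, train_c, x, w_target):
    """Return R(x) and the analytic gradient dR/dx_j (labels c_i in {-1, +1})."""
    d2 = [sum((a - b) ** 2 for a, b in zip(xi, x)) for xi in train_x]
    sigma = adaptive_sigma(d2, w_target)
    w = [math.exp(-d / (2.0 * sigma * sigma)) for d in d2]
    total_w = sum(w)
    r = sum(wi * ci for wi, ci in zip(w, train_c)) / total_w
    # Denominator of the correction for sigma varying with x (W held fixed)
    denom = sum(dk * wk for dk, wk in zip(d2, w))
    grad = []
    for j in range(len(x)):
        shift = sum(wk * (xk[j] - x[j]) for wk, xk in zip(w, train_x)) / denom
        s = sum(ci * wi * (xi[j] - x[j] - di * shift)
                for ci, wi, xi, di in zip(train_c, w, train_x, d2))
        grad.append(s / (total_w * sigma * sigma))
    return r, grad


def finite_difference_gradient(train_x, train_c, x, w_target, h=1e-5):
    """Central-difference approximation of dR/dx_j, for checking."""
    grad = []
    for j in range(len(x)):
        xp, xm = list(x), list(x)
        xp[j] += h
        xm[j] -= h
        rp = r_and_gradient(train_x, train_c, xp, w_target)[0]
        rm = r_and_gradient(train_x, train_c, xm, w_target)[0]
        grad.append((rp - rm) / (2.0 * h))
    return grad


if __name__ == "__main__":
    train_x = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [1.0, 2.0]]
    train_c = [-1, -1, -1, 1, 1, 1]
    x = [0.7, 0.4]
    print(r_and_gradient(train_x, train_c, x, 3.0)[1])
    print(finite_difference_gradient(train_x, train_c, x, 3.0))  # should agree closely
```

Because $\sigma$ is re-solved at each perturbed point, the finite differences include the effect of the varying filter width, which is exactly what the second term inside the brackets accounts for.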